Diffusion models, first proposed by the paper “Denoising Diffusion Probabilistic Models” by J. Ho et al. (2020), now has been used at various of downstream task such as:

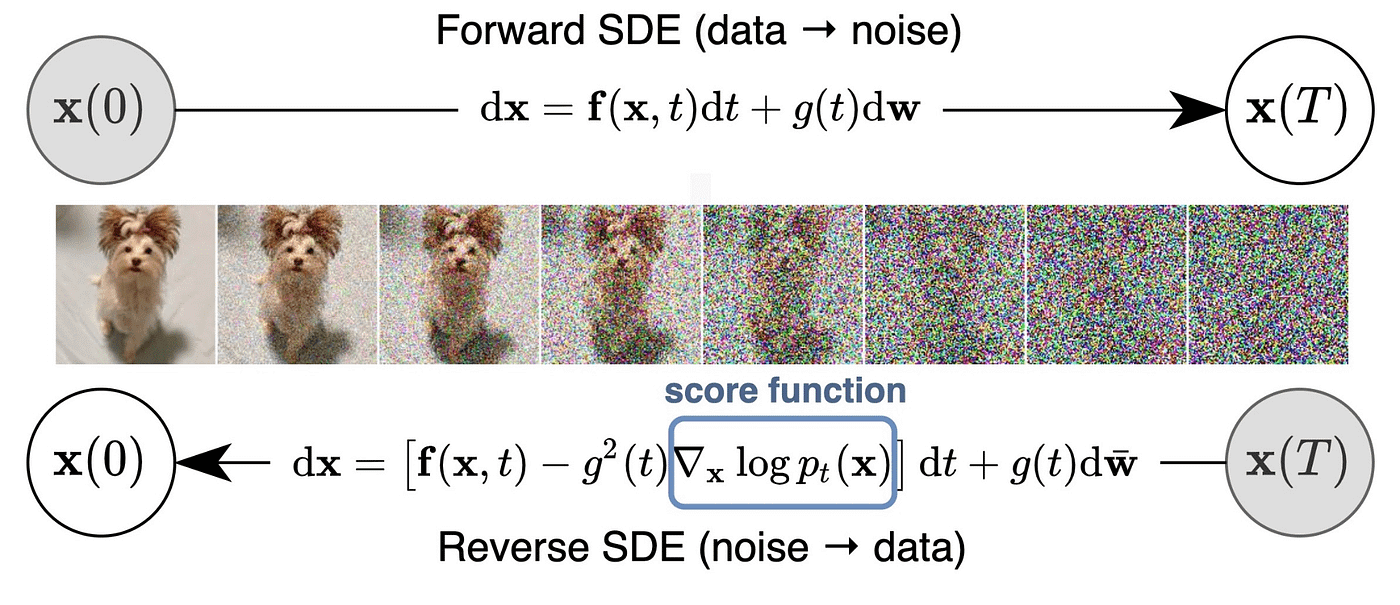

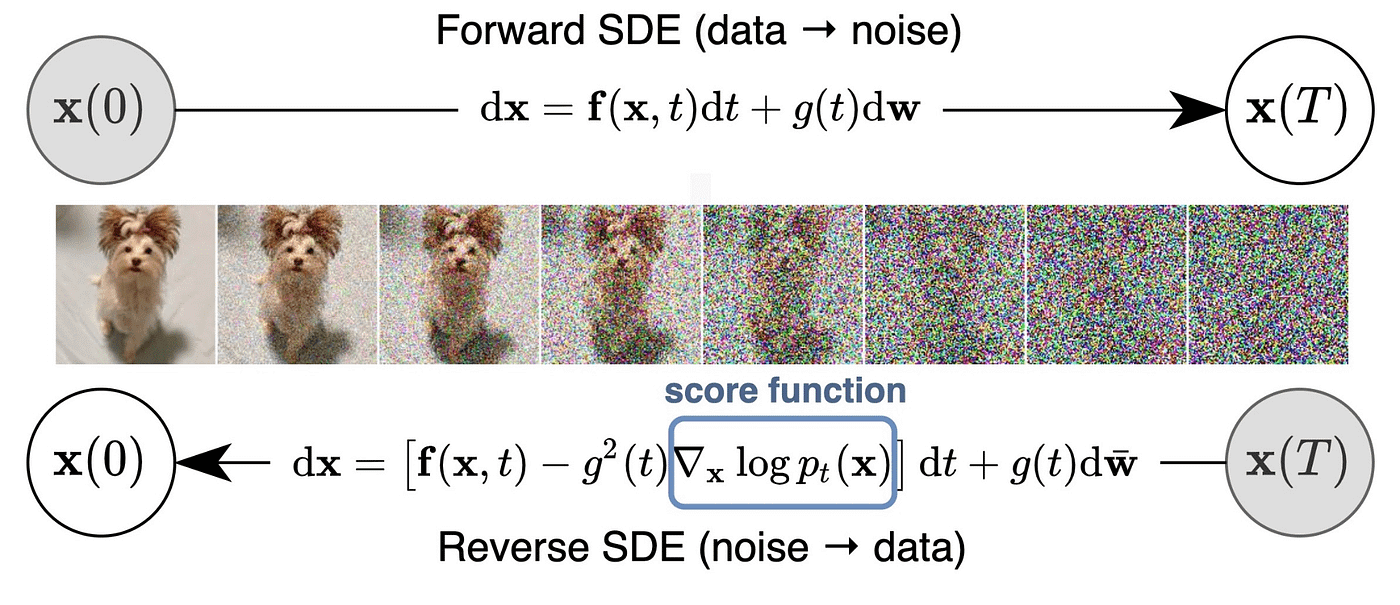

As shown above, a diffusion model consists of two phases:

The key that diffusion model can be used at various generation task is that it can learn how to generate data (like images) from random noise (which is cheap) by reversing a step-by-step noise-adding process.

Text-to-image is one of the most popular applications of diffusion model, whose task is to generate image according to a text prompt.

A text-to-image diffusion model is a conditional diffusion model, where the generation process is conditioned on textual input. Instead of generating images purely from random noise, the model is guided by text embeddings (numerical representations of text prompts) at each step of the denoising process.

Text Embeddings: text embeddings are numerical representations of the meaning of a given text (e.g., “A white cat with blue eyes”). Text embeddings are usually generated by a pre-trained language model (usually Contrastive Language-Image Pretraining a.k.a. CLIP model). Then the encoded text can affect the generation process in specific way (I wish to write another blog to discuss CLIP model and how text and image interact with each other 🧐).

If you are learner for computer vision just like me, I would suggest you to start with fine-tuning a stable diffusion model to get the taste of diffusion model. A well-established repository for training / fine-tuning a stable diffusion model would be 🤗 Diffusers by Hugging Face. Take myself as an example, my first try on diffusion model is training a LoRA Stable Diffusion model using this script diffusers/examples/text_to_image/train_text_to_image_lora.py

One can easily get fammiliar with some basic concepts by fine-tuning a stable diffusion model by following the instruction of README.md under the same directory with the fine-tuning script.